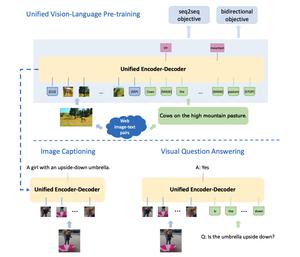

An Overview Of Vision And Language Pre-Trained Models | Papers With Code

This line of work involves models that adapt pre-training to vision-and-language (V-L) learning and improve performance on downstream tasks such as visual question answering and visual captioning. In this paper, we review the recent progress in vision-language pre-trained models (VL-PTMs). As the core content, we first briefly introduce several ways to encode raw images and texts into single-modal embeddings before pre-training.
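
To make "encoding raw images and texts into single-modal embeddings" concrete, here is a minimal sketch of two common encoders: a ViT-style patch projection for images and a lookup-table word embedding for text. It is not the survey's own method. It assumes a grayscale image stored as a list of lists, a toy whitespace tokenizer, a hypothetical five-word vocabulary, and randomly initialised weights. The helper names (`patchify`, `embed_image`, `embed_text`) are illustrative only. Region features from an object detector, which many VL-PTMs also use, are not shown.

```python
import random

def patchify(image, patch):
    """Split an H x W grayscale image (list of lists) into flattened, non-overlapping patch x patch tiles."""
    h, w = len(image), len(image[0])
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by patch size"
    patches = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            patches.append([image[r][c]
                            for r in range(top, top + patch)
                            for c in range(left, left + patch)])
    return patches

def linear(vec, weight):
    """Project vec with a weight matrix given as a list of rows (one row per output dimension)."""
    return [sum(w_i * x_i for w_i, x_i in zip(row, vec)) for row in weight]

def embed_image(image, patch, weight):
    """Image encoder sketch: each flattened patch becomes one embedding (token)."""
    return [linear(p, weight) for p in patchify(image, patch)]

def embed_text(text, vocab, table):
    """Text encoder sketch: whitespace tokens -> ids -> rows of an embedding table."""
    ids = [vocab.get(tok, vocab["[UNK]"]) for tok in text.lower().split()]
    return [table[i] for i in ids]

rng = random.Random(0)
dim, patch = 8, 2
# Hypothetical randomly initialised parameters; a real model learns these during pre-training.
w_img = [[rng.gauss(0, 0.02) for _ in range(patch * patch)] for _ in range(dim)]
vocab = {"[UNK]": 0, "a": 1, "dog": 2, "on": 3, "grass": 4}
table = [[rng.gauss(0, 0.02) for _ in range(dim)] for _ in vocab]

image = [[(r * 4 + c) / 15 for c in range(4)] for r in range(4)]  # toy 4x4 image
img_tokens = embed_image(image, patch, w_img)
txt_tokens = embed_text("A dog on grass", vocab, table)
print(len(img_tokens), len(img_tokens[0]))  # 4 patches, 8 dims each
print(len(txt_tokens), len(txt_tokens[0]))  # 4 word tokens, 8 dims each
```

Both encoders yield a sequence of same-width vectors, which is what allows a later pre-training stage to treat image and text tokens uniformly.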

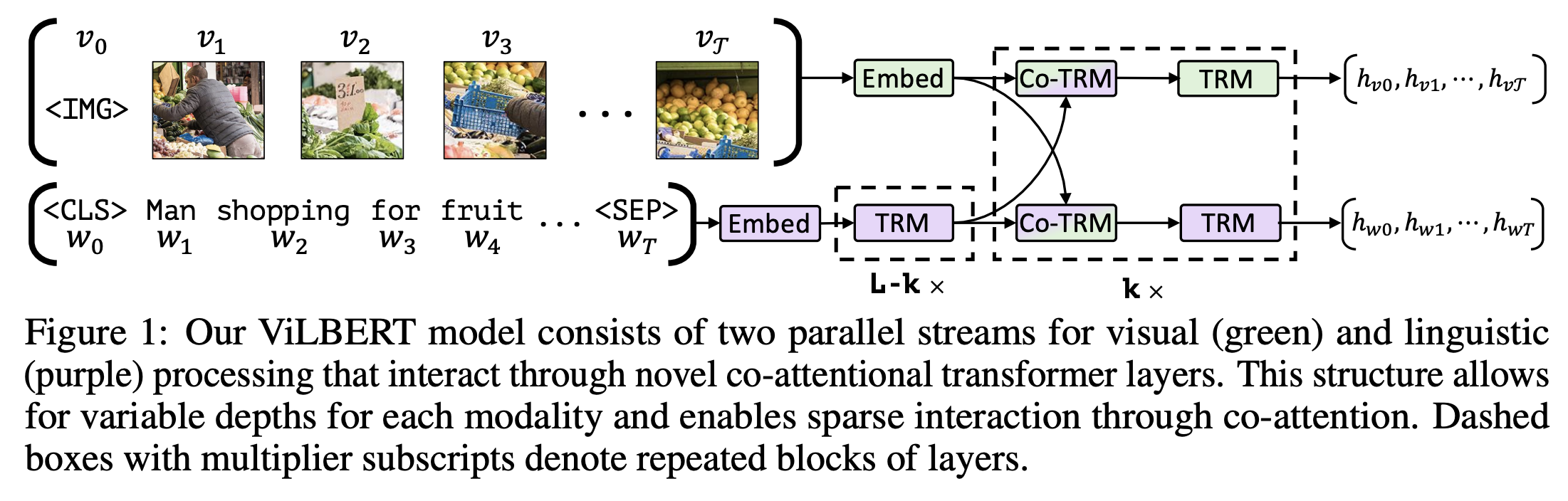

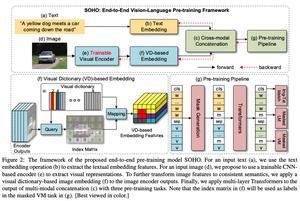

In this paper, we present an overview of the major advances achieved in VLPMs for producing joint representations of vision and language. As the preliminaries, we briefly describe the general task definition and generic architecture of VLPMs.

An up-to-date collection and survey of vision-language model papers, models, and GitHub repositories compiles notable work in the area, listing state-of-the-art VLMs from newest to oldest (we'll keep updating new models and benchmarks).

To fill this gap, this paper surveys the landscape of language and vision pre-training through the lens of multimodal machine translation. We summarize the common architectures, pre-training objectives, and datasets from the literature and conjecture what further is needed to make progress on multimodal machine translation.
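
One widely used way to produce a joint vision-language representation is a single-stream design: image and text tokens are concatenated into one sequence, tagged with modality-type embeddings, and passed through self-attention so that every token can attend across modalities. The sketch below illustrates that idea only; it is not necessarily the generic architecture the survey describes. It assumes a single attention head with identity query/key/value projections (a deliberate simplification), random toy token vectors, and hypothetical constant modality-type embeddings.

```python
import math
import random

def add(u, v):
    return [a + b for a, b in zip(u, v)]

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Single-head scaled dot-product self-attention with identity Q/K/V projections (a simplification)."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)])
    return out

def single_stream_fuse(img_tokens, txt_tokens, type_img, type_txt):
    """Concatenate both modalities into one sequence, add a modality-type embedding to each token,
    and let every token attend to every other token (image-to-text and text-to-image included)."""
    seq = [add(t, type_img) for t in img_tokens] + [add(t, type_txt) for t in txt_tokens]
    return self_attention(seq)

rng = random.Random(1)
dim = 4
img_tokens = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(3)]  # e.g. 3 image patches or regions
txt_tokens = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(2)]  # e.g. 2 word tokens
type_img = [0.1] * dim   # hypothetical modality-type embeddings
type_txt = [-0.1] * dim
joint = single_stream_fuse(img_tokens, txt_tokens, type_img, type_txt)
print(len(joint), len(joint[0]))  # 5 contextualised tokens of width 4
```

A dual-stream alternative would keep separate image and text encoders and exchange information through cross-attention layers; the single-stream version is shown here only because it is the shortest to write down.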

Contrastive language-image pre-training (CLIP) is an essential component of modern vision-language foundation models. While CLIP demonstrates remarkable zero-shot performance on downstream tasks, its multimodal feature space still suffers from a modality gap: image features and text features form separate clusters, which limits downstream task performance.

This paper provides a systematic review of visual language models for various visual recognition tasks, including: (1) the background, which introduces the development of visual recognition paradigms; (2) the foundations of VLMs, which summarize the widely adopted network architectures, pre-training objectives, and downstream tasks; (3) the widely …

- In-depth analysis: *A Closer Look at the Robustness of Vision-and-Language Pre-trained Models*, arXiv 2020/12.
- Adversarial training: *Large-Scale Adversarial Training for Vision-and-Language Representation Learning*, NeurIPS 2020 Spotlight.
- Adaptive analysis: *Adaptive Transformers for Learning Multimodal Representations*, ACL SRW 2020.

Vision-language models are integral to computer vision research, yet many high-performing models remain closed-source, obscuring their data, design, and training recipes. The research community has responded by using distillation from black-box models to label training data, achieving strong benchmark results at the cost of measurable scientific progress.
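
The CLIP objective and the modality gap mentioned above can be illustrated with a small pure-Python sketch. The loss is the symmetric contrastive (InfoNCE-style) objective over a batch in which `img_emb[i]` and `txt_emb[i]` are a matching pair. The gap is measured here, as an assumption, by the Euclidean distance between the centroids of the L2-normalised image and text embeddings, one common way to quantify it. The fixed temperature of 0.07 is also an assumption (CLIP learns its temperature), and the toy embeddings are made up for illustration.

```python
import math

def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def logsumexp(xs):
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))

def clip_loss(img_emb, txt_emb, temperature=0.07):
    """Symmetric contrastive loss: each image should score its own caption highest, and vice versa."""
    imgs = [normalize(v) for v in img_emb]
    txts = [normalize(v) for v in txt_emb]
    n = len(imgs)
    # logits[i][j] = cosine similarity of image i and text j, scaled by 1/temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature for j in range(n)]
              for i in range(n)]
    # image -> text: cross-entropy of row i against target column i
    loss_i2t = sum(logsumexp(logits[i]) - logits[i][i] for i in range(n)) / n
    # text -> image: cross-entropy of column j against target row j
    loss_t2i = sum(logsumexp([logits[i][j] for i in range(n)]) - logits[j][j] for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2

def modality_gap(img_emb, txt_emb):
    """Distance between the centroids of the L2-normalised image and text embeddings (assumed metric)."""
    imgs = [normalize(v) for v in img_emb]
    txts = [normalize(v) for v in txt_emb]
    dim = len(imgs[0])
    c_img = [sum(v[k] for v in imgs) / len(imgs) for k in range(dim)]
    c_txt = [sum(v[k] for v in txts) / len(txts) for k in range(dim)]
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(c_img, c_txt)))

# Toy batch: pairs are aligned along the second axis, but images and texts sit in different "cones".
images = [[1.0, 0.2, 0.0], [1.0, -0.2, 0.0]]
captions = [[0.2, 0.2, 1.0], [0.2, -0.2, 1.0]]
print(round(clip_loss(images, captions), 4), round(modality_gap(images, captions), 4))
```

In the toy batch each image is still closest to its own caption, yet the two modalities occupy clearly separated regions of the sphere, which is the situation the modality-gap observation describes.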