Hierarchical Transformers

In this article, we look at hierarchical transformers: what they are, how they work, how they differ from standard transformers, and what their benefits are. Let’s get started.

... sparse transformers by leveraging structured factorization. This work, similarly to the papers mentioned above on efficient transformers, concentrates on speeding up the attention component, while the most important feature of the Hourglass arch...

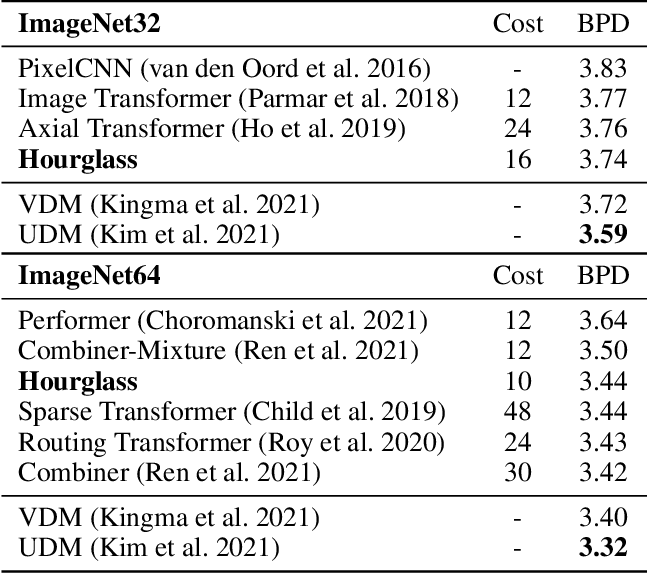

One line of work presents a general strategy for converting transformer-based super-resolution (SR) networks into hierarchical transformers (HiT-SR), boosting SR performance with multi-scale features while maintaining an efficient design.

In language modeling, the paper "Hierarchical Transformers Are More Efficient Language Models" uses the best-performing upsampling and downsampling layers to create Hourglass, a hierarchical transformer language model. Hourglass improves upon the transformer baseline given the same amount of computation and can yield the same results as transformers more efficiently.

Transformer models demonstrate notable effectiveness in numerous NLP and sequence modeling tasks. Their ability to process relatively long sequences enables them to generate lengthy, coherent outputs, such as complete paragraphs by GPT-3 or intricately organized images by DALL-E. The hierarchical architecture handles such long sequences efficiently: the first half of the transformer layers downsamples tokens, and the second half upsamples them, with direct skip connections between layers of the same resolution.
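
The downsample, process, upsample, and skip-connection flow described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the Hourglass implementation: average pooling and repeat upsampling are assumed choices (the text does not name which layers performed best), the `pre`, `middle`, and `post` callables are hypothetical stand-ins for stacks of transformer layers, and the token shifting needed to keep a real autoregressive model causal is left out.

```python
from typing import Callable, List

Tokens = List[List[float]]          # one vector per token
Layer = Callable[[Tokens], Tokens]  # hypothetical stand-in for a stack of transformer layers


def downsample(tokens: Tokens, k: int) -> Tokens:
    """Average-pool every k consecutive token vectors into one (assumed pooling choice)."""
    if len(tokens) % k != 0:
        raise ValueError("sequence length must be divisible by k")
    pooled = []
    for start in range(0, len(tokens), k):
        group = tokens[start:start + k]
        pooled.append([sum(dim) / k for dim in zip(*group)])
    return pooled


def upsample(tokens: Tokens, k: int) -> Tokens:
    """Repeat each coarse token k times to restore the original length (assumed choice)."""
    return [list(tok) for tok in tokens for _ in range(k)]


def add(a: Tokens, b: Tokens) -> Tokens:
    """Element-wise sum of two equally shaped sequences, used as the skip connection."""
    return [[x + y for x, y in zip(u, v)] for u, v in zip(a, b)]


def hourglass(tokens: Tokens, k: int, pre: Layer, middle: Layer, post: Layer) -> Tokens:
    fine = pre(tokens)                         # layers at full resolution
    coarse = middle(downsample(fine, k))       # layers on the shortened sequence
    restored = add(upsample(coarse, k), fine)  # skip connection at the same resolution
    return post(restored)                      # remaining layers at full resolution


def identity(tokens: Tokens) -> Tokens:
    """Placeholder layer that returns its input unchanged."""
    return tokens


example = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
result = hourglass(example, 2, identity, identity, identity)
print(len(example), "tokens in,", len(result), "tokens out")
```

The point of the sketch is the shape bookkeeping: the middle layers see a sequence `k` times shorter than the input, while the skip connection reintroduces the fine-grained information that pooling discards.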

A hierarchical transformer architecture is a class of neural network model distinguished by its explicit multi-level structure, which enables information processing and representation learning at several granularities, in parallel or in sequence.

Hierarchical transformers pave the way for NLP systems that are more robust, flexible, and generalizable across languages and domains.

To summarize, hierarchical transformers address the challenges of handling long sequences and high memory usage by employing a hierarchical, autoregressive approach to process sequence data efficiently.
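
The efficiency argument for long sequences can be made concrete with rough arithmetic. Standard self-attention computes one score per pair of positions, so a layer over `n` tokens produces `n * n` scores; a layer that runs on a sequence shortened by a factor `k` produces only `(n / k) ** 2`. The sketch below counts those scores only. The sequence length, shortening factor, and layer split are hypothetical values chosen for illustration, and feed-forward cost, head count, and model width are ignored.

```python
def attention_pairs(n: int) -> int:
    """Number of attention scores one full self-attention layer computes over n tokens."""
    return n * n


def hourglass_pairs(n: int, k: int, fine_layers: int, coarse_layers: int) -> int:
    """Attention scores when only fine_layers run at full length and coarse_layers at n // k."""
    if n % k != 0:
        raise ValueError("sequence length must be divisible by k")
    return fine_layers * attention_pairs(n) + coarse_layers * attention_pairs(n // k)


# Hypothetical configuration: 12 layers in total, 8 of them on the shortened sequence.
n, k = 4096, 4
baseline = 12 * attention_pairs(n)
hierarchical = hourglass_pairs(n, k, fine_layers=4, coarse_layers=8)
print(baseline, hierarchical, baseline / hierarchical)
```

With these hypothetical numbers the count drops from about 201 million to about 75 million attention scores per forward pass, roughly a 2.7x reduction. Most of the remaining cost comes from the four full-resolution layers, which is why the amount of depth spent at the shortened resolution matters.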
