LLM-Powered Data Augmentation for Enhanced Crosslingual Performance

The paper "LLM-Powered Data Augmentation for Enhanced Cross-Lingual Performance", by Chenxi Whitehouse and 2 other authors, explores the potential of leveraging large language models (LLMs) for data augmentation in multilingual commonsense reasoning datasets where the available training data is extremely limited.

In the era of artificial intelligence, data is gold but costly to annotate. The paper proposes a solution to this dilemma: using ChatGPT for text augmentation in sentiment. How do we generate more data with LLMs? Which LLMs do we use? Results for some languages, such as Tamil, are surprisingly poor. Even so, LLM-powered data augmentation is promising.

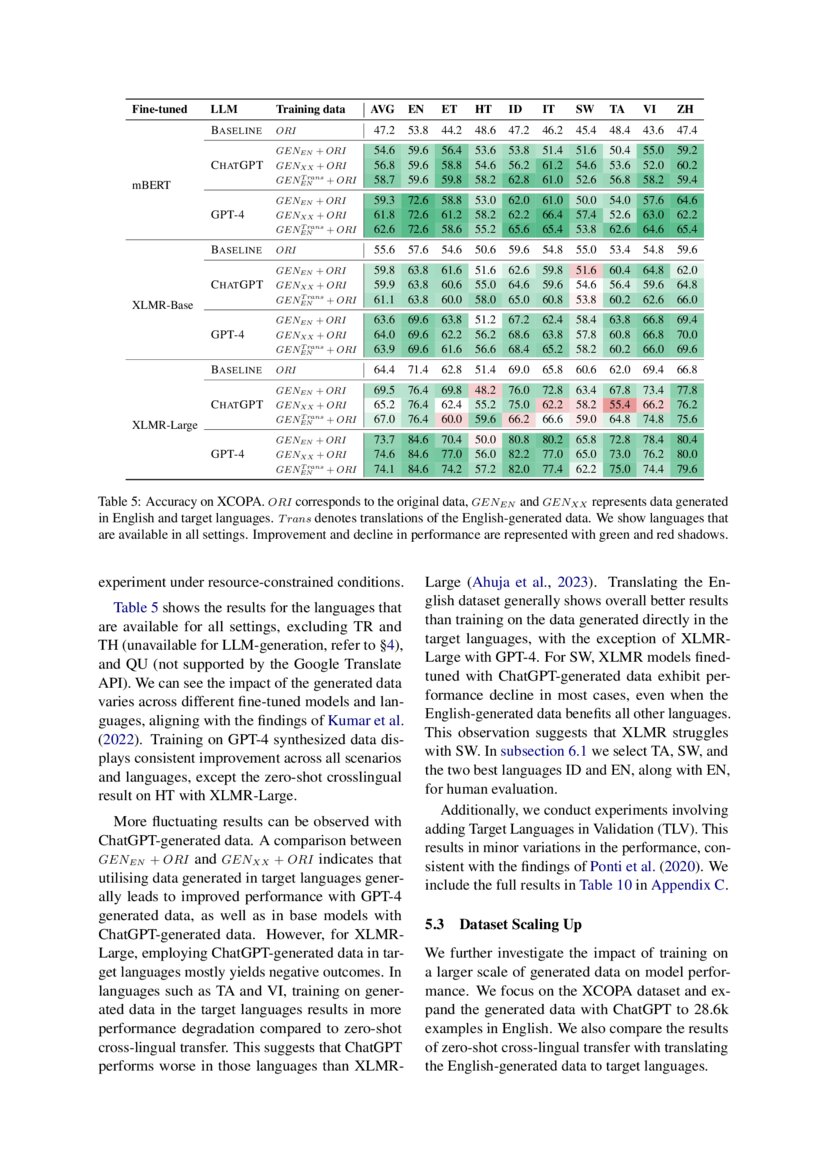

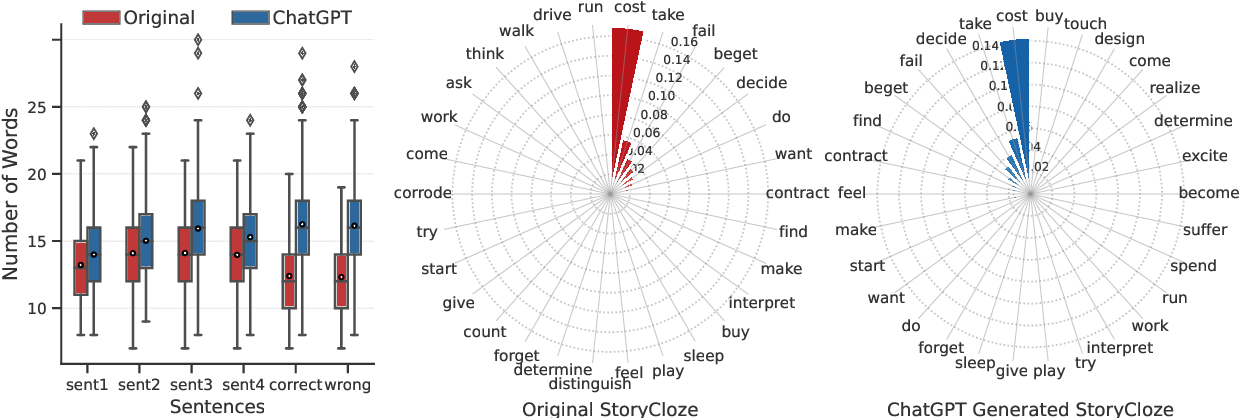

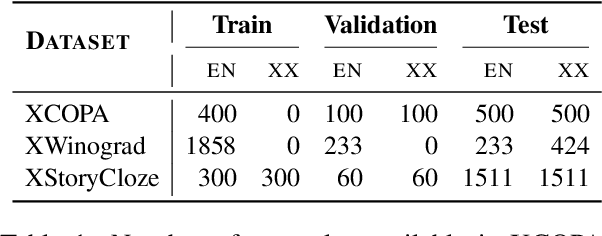

To explore this, the paper uses several LLMs, namely Dolly-v2, StableVicuna, ChatGPT, and GPT-4, to augment three datasets: XCOPA, XWinograd, and XStoryCloze. It then evaluates the effectiveness of fine-tuning smaller multilingual models, such as mBERT.
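The augmentation step described above can be sketched roughly as follows. This is a minimal illustration, not the authors' pipeline: the prompt wording, the `generate` callable (a stand-in for whichever LLM is used), and the filtering rules are all assumptions, and the example fields follow a COPA-style layout (`premise`, `choice1`, `choice2`, `question`, `label`) chosen for illustration.

```python
import json
from typing import Callable, Dict, List

# Assumed COPA-style schema; the paper's exact data format is not given here.
REQUIRED_KEYS = {"premise", "choice1", "choice2", "question", "label"}


def build_prompt(seed_examples: List[Dict], n_new: int) -> str:
    """Show a few seed examples and ask for n_new more in the same format."""
    shots = "\n".join(json.dumps(ex, ensure_ascii=False) for ex in seed_examples)
    return (
        "Here are examples of a commonsense reasoning task, "
        "one JSON object per line:\n"
        f"{shots}\n"
        f"Write {n_new} new, diverse examples in the same format, one per line."
    )


def parse_examples(raw: str) -> List[Dict]:
    """Keep only lines that parse as JSON objects with all required fields."""
    kept = []
    for line in raw.splitlines():
        line = line.strip()
        if not line:
            continue
        try:
            obj = json.loads(line)
        except json.JSONDecodeError:
            continue  # LLM output can be noisy; skip malformed lines
        if (
            isinstance(obj, dict)
            and REQUIRED_KEYS <= obj.keys()
            and obj["question"] in ("cause", "effect")
            and obj["label"] in (0, 1)
        ):
            kept.append(obj)
    return kept


def augment(
    seed_examples: List[Dict],
    generate: Callable[[str], str],  # hypothetical: prompt in, raw text out
    n_new: int = 5,
) -> List[Dict]:
    """Return the seed examples plus whatever valid synthetic ones came back."""
    prompt = build_prompt(seed_examples, n_new)
    return seed_examples + parse_examples(generate(prompt))


def fake_llm(prompt: str) -> str:
    """Stub standing in for a real LLM call, so the sketch runs offline."""
    return "\n".join([
        '{"premise": "The ice melted.", "choice1": "It was left in the sun.", '
        '"choice2": "It was put in the freezer.", "question": "cause", "label": 0}',
        "not json at all",
        '{"premise": "Missing fields"}',
    ])


seed = [{
    "premise": "The man broke his toe.",
    "choice1": "He got a hole in his sock.",
    "choice2": "He dropped a hammer on his foot.",
    "question": "cause",
    "label": 1,
}]
train = augment(seed, fake_llm, n_new=3)
print(len(train))
```

In a real setting, `fake_llm` would be replaced by a call to an actual model, and the combined list would serve as training data for fine-tuning a smaller multilingual model. Validating the generated lines before use matters because malformed or incomplete outputs would otherwise end up in the training set.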

Table 1 from LLM-Powered Data Augmentation for Enhanced Crosslingual

Comparing LLM Prompting with Cross-Lingual Transfer Performance on

This paper aims to explore the potential of leveraging large language models (LLMs) for data augmentation in crosslingual commonsense reasoning datasets, where the available training data is extremely limited.
