Data Scraping Powerpoint And Google Slides Template Ppt Slides

This tutorial walks you through these tasks:

1. Creating a new Scrapy project.
2. Writing a spider to crawl a site and extract data.
3. Exporting the scraped data using the command line.
4. Changing the spider to recursively follow links.
5. Using spider arguments.

Scrapy is written in Python, so the more you learn about Python, the more you can get out of Scrapy.

Slides that are overloaded with text, clip art, colors, sounds, or movement distract the audience and can reduce the overall effectiveness of the presentation. Here are some basic principles to guide you in simplifying your PowerPoint or Prezi slides: limit the amount of text you include on a single slide.

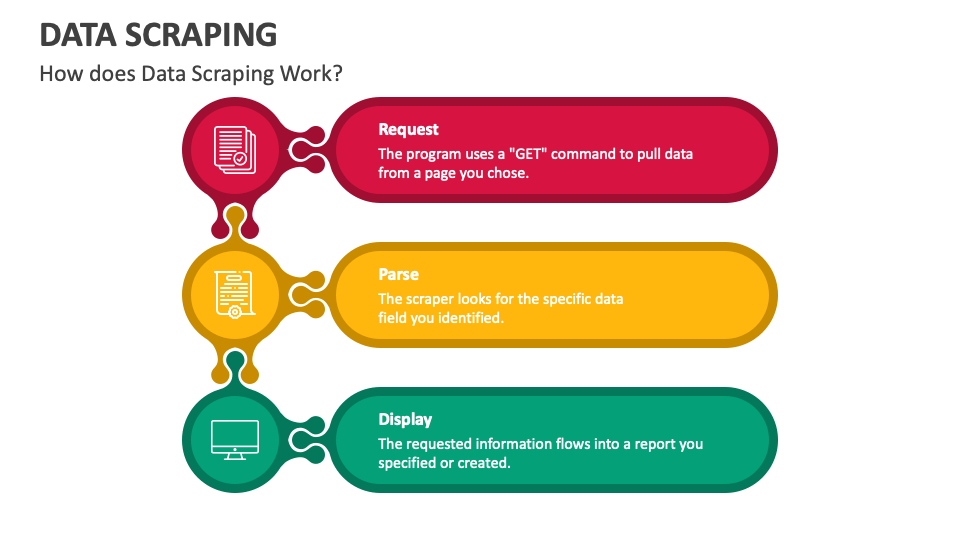

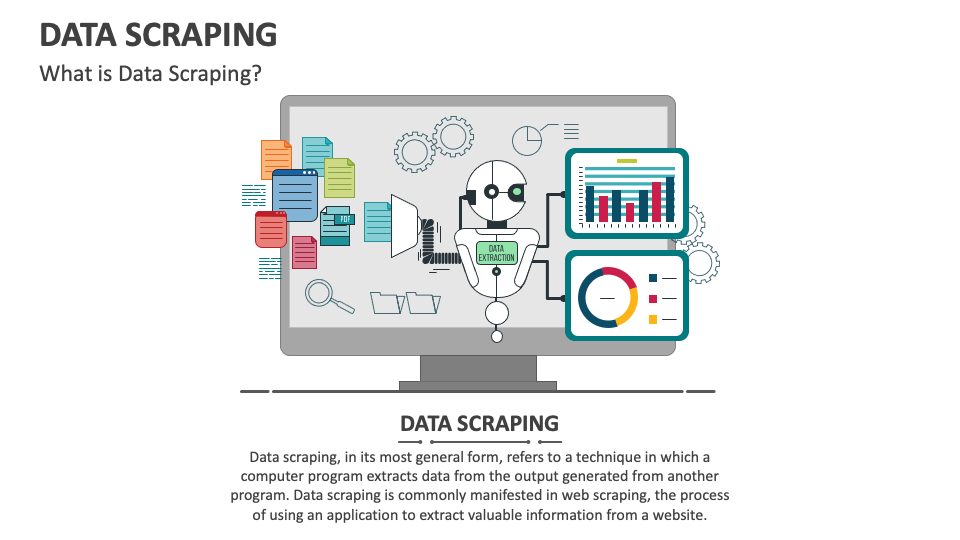

In this guide, I'm excited to walk you through the key things you need to use Scrapy effectively. I'll be sharing lots of real-world examples, code snippets, visuals, and hard-earned advice, all explained in simple terms.

Scrapy is a fast, open-source Python framework for crawling web pages and extracting structured data using XPath (or CSS) selectors. It makes large crawling projects easy to build and scale, handles requests asynchronously for speed, and can automatically adjust crawling speed with its AutoThrottle extension.

The presentation covered what web scraping is, the workflow of a web scraper, useful Python libraries for scraping (including BeautifulSoup, lxml, and re), and the advantages of scraping over using an API. Web scraping involves fetching a website with HTTP requests, parsing the HTML document with a parsing library, and storing the results.
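The three-step workflow just described (fetch over HTTP, parse the HTML, store the results) can be sketched with only the Python standard library. This is an illustrative sketch, not code from the presentation: it swaps in `html.parser` instead of BeautifulSoup or lxml, extracts links only as an example target, and the names `fetch`, `LinkParser`, `to_csv`, and `scrape` are hypothetical.

```python
import csv
import io
from html.parser import HTMLParser
from urllib.request import urlopen


def fetch(url: str) -> str:
    """Step 1: get the page over HTTP (requires network access)."""
    with urlopen(url, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset)


class LinkParser(HTMLParser):
    """Step 2: parse the HTML and collect (href, link text) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only buffer text while inside an <a> that has an href.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def to_csv(rows) -> str:
    """Step 3: store the results, here as CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["href", "text"])
    writer.writerows(rows)
    return buf.getvalue()


def scrape(html: str) -> str:
    """Run steps 2 and 3 on already-fetched HTML."""
    parser = LinkParser()
    parser.feed(html)
    parser.close()
    return to_csv(parser.links)
```

For a live page you would call `scrape(fetch(url))`; keeping the parse and store steps separate from `fetch` lets them be tried on a saved HTML string without any network access.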

Quick summary: this blog gives an overview of web scraping, explains how to extract data with Python and what advantages scraping offers IT companies, and provides a detailed list of the 7 best Python libraries for web scraping, including Beautiful Soup, Scrapy, and Selenium, with their features, advantages, and disadvantages.

This guide serves as a concise tutorial for setting up your Scrapy project and getting started with web scraping. For a more comprehensive understanding, you may want to look for a Scrapy tutorial PDF that covers advanced topics and best practices.

Welcome to Zenva's tutorial on Scrapy, an incredibly useful Python library that lets you create web scrapers with ease. Whether you're new to coding or an experienced programmer, this guide shows how engaging Scrapy can be, demonstrating its practical value while keeping the learning process as accessible as possible.

This document introduces Scrapy, an open-source and collaborative framework for extracting data from websites. It discusses what Scrapy is used for, its advantages over alternatives such as Beautiful Soup, and provides steps to install Scrapy and create a sample.
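When setting up a Scrapy project, the auto-throttling mentioned in the overview is configured in the project's `settings.py`. The fragment below is a hedged sketch: the setting names are real Scrapy settings, but the project name `tutorial` is hypothetical and the numeric values are simply Scrapy's documented defaults, shown here as a starting point rather than recommended tuning.

```python
# settings.py (fragment): politeness and throttling options for a Scrapy project.

BOT_NAME = "tutorial"  # hypothetical project name

# Respect robots.txt rules (the startproject template already enables this).
ROBOTSTXT_OBEY = True

# AutoThrottle adapts download delays to observed server latency.
# It is off by default, so it has to be switched on explicitly.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 5.0  # initial download delay, in seconds
AUTOTHROTTLE_MAX_DELAY = 60.0  # upper bound on the delay when latency is high
AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0  # average parallel requests per remote site
```

Raising `AUTOTHROTTLE_TARGET_CONCURRENCY` crawls faster at the cost of more load on the target site; keeping it at 1.0 is the conservative choice.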