RAG Versus LLM Fine-Tuning

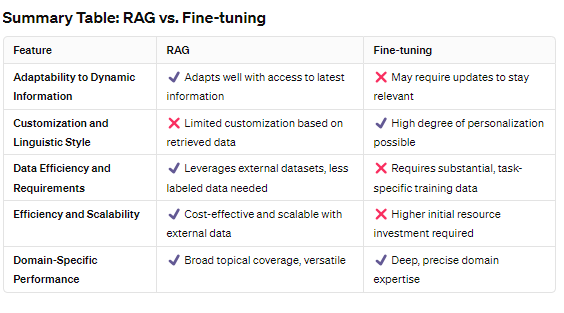

Retrieval-augmented generation (RAG) is the process of optimizing the output of a large language model (LLM) so that it references an authoritative knowledge base outside its training data before generating a response. It does this by adding an information retrieval mechanism that lets the model access and use data beyond its original training set.

RAG is an approach in natural language processing (NLP) that combines the strengths of retrieval-based and generation-based models to improve the quality of generated text. Traditional RAG systems typically retrieve isolated facts from unstructured data sources. In contrast, graph RAG leverages structured data from knowledge graphs, which lets it handle complex queries more effectively.

Think of RAG as handing your AI a specific set of relevant documents (or chunks) that it can quickly scan for useful information before answering your question. Architecturally, RAG connects an AI model to external knowledge bases, which helps LLMs deliver more relevant, higher-quality responses.
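The "scan the relevant chunks, then answer" idea can be sketched in a few lines of Python. This is a minimal illustration only, not how any particular RAG framework works: it assumes a toy bag-of-words cosine similarity in place of a real embedding model, and the names `tokenize`, `retrieve`, and `build_prompt` are hypothetical. The final call to an LLM is left out, since the prompt would simply be passed to whichever model you use.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, chunks, top_k=2):
    """Return up to top_k chunks that share vocabulary with the query.

    Assumption: word overlap stands in for the embedding similarity
    a production system would use.
    """
    query_vec = Counter(tokenize(query))
    scored = [(cosine_similarity(query_vec, Counter(tokenize(c))), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for score, chunk in scored[:top_k] if score > 0]


def build_prompt(query, retrieved):
    """Place the retrieved chunks ahead of the question (the 'augmented' step)."""
    context = "\n".join(f"- {chunk}" for chunk in retrieved)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    knowledge_base = [
        "The refund policy allows returns within 30 days.",
        "Our office is closed on public holidays.",
        "Shipping takes five business days.",
    ]
    question = "What is the refund policy?"
    print(build_prompt(question, retrieve(question, knowledge_base, top_k=1)))
```

The design point is that the model's weights never change: updating what the system knows only means updating `knowledge_base`.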

Think of RAG as the AI equivalent of a brilliant librarian who not only knows where to look for answers but also crafts a coherent response tailored to your needs. Explore the RAG frameworks and tools, what RAG is, how it works, its benefits, and the current situation in the LLM landscape. We used our methodology to benchmark 11 different LLMs and to evaluate the impact of RAG parameters such as chunk size and embedding models.

RAG is a technique for enhancing the accuracy and reliability of generative AI models with facts fetched from external sources. It is changing how AI systems understand and generate accurate, timely, and context-rich responses. By combining LLMs with real-time document retrieval, RAG connects static training data with changing, evolving knowledge, whether you are building a chatbot, a search assistant, or an enterprise knowledge tool.
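Chunk size is one of the RAG parameters mentioned above. As a rough sketch of what that parameter controls, the hypothetical helper below splits a document into overlapping word windows. Measuring chunks in words, and the `chunk_size` and `overlap` defaults, are assumptions made for illustration; real pipelines often count tokens or characters instead.

```python
def chunk_words(text, chunk_size=50, overlap=10):
    """Split text into chunks of up to chunk_size words.

    Consecutive chunks share `overlap` words so that a sentence cut at a
    boundary still appears whole in at least one chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in the range [0, chunk_size)")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current window has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(10))
    for chunk in chunk_words(sample, chunk_size=4, overlap=1):
        print(chunk)
```

Smaller chunks give the retriever more precise matches but less surrounding context per match, while larger chunks do the opposite, which is why the setting is worth benchmarking rather than guessing.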


