Keeping LLMs Relevant: A Practical Guide to RAG and Fine-Tuning

RAG requires more computational power and memory to serve user queries than an LLM without RAG, because every query adds a retrieval step and a longer prompt. This can lead to higher operational costs, especially when scaling for widespread use. Fine-tuning is computationally intensive, but it is performed once up front and can then be leveraged across a large number of user queries. Let's examine when to use RAG versus fine-tuning for LLMs, smaller models, and pre-trained models. We'll cover: a brief background on LLMs and RAG, and RAG's advantages over fine-tuning.
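The cost trade-off above can be made concrete with a simple break-even calculation. This is a minimal sketch under loudly stated assumptions: fine-tuning is a one-time cost, the fine-tuned model serves queries at the same base cost as the plain LLM, and RAG adds a fixed overhead per query for retrieval and the longer prompt. The function names and every number are hypothetical; real costs depend on your provider, model size, and how often a fine-tuned model has to be retrained.

```python
def break_even_queries(fine_tune_cost: float, rag_overhead_per_query: float) -> float:
    """Queries after which a one-time fine-tuning cost beats RAG's per-query overhead.

    Assumes (hypothetically) that both setups share the same base serving cost,
    so only RAG's extra per-query cost is compared against the fine-tuning cost.
    """
    if rag_overhead_per_query <= 0:
        raise ValueError("rag_overhead_per_query must be positive")
    return fine_tune_cost / rag_overhead_per_query


def total_costs(num_queries: int, fine_tune_cost: float,
                base_cost_per_query: float, rag_overhead_per_query: float):
    """Return (rag_total, fine_tuned_total) for a given query volume."""
    # RAG: every query pays the base cost plus retrieval/long-prompt overhead.
    rag_total = num_queries * (base_cost_per_query + rag_overhead_per_query)
    # Fine-tuning: one-time training cost, then only the base cost per query.
    # Retraining when knowledge changes is deliberately ignored here.
    fine_tuned_total = fine_tune_cost + num_queries * base_cost_per_query
    return rag_total, fine_tuned_total


if __name__ == "__main__":
    # Hypothetical figures: $500 fine-tuning run, $0.01 base and $0.002 RAG overhead per query.
    print(break_even_queries(500.0, 0.002))
    print(total_costs(1_000, 500.0, 0.01, 0.002))
```

With these hypothetical figures the break-even point is roughly 250,000 queries; below that volume RAG is cheaper under this model. The model ignores retraining, which matters when the underlying knowledge changes often.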

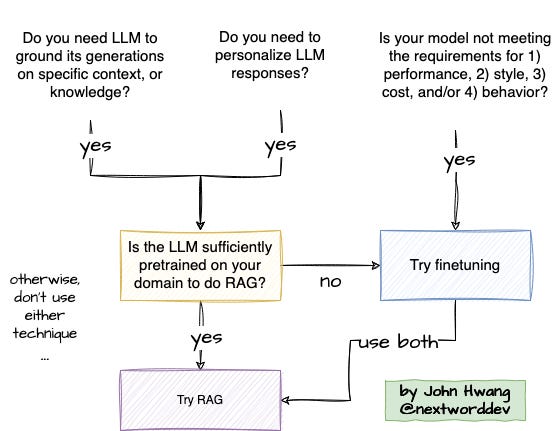

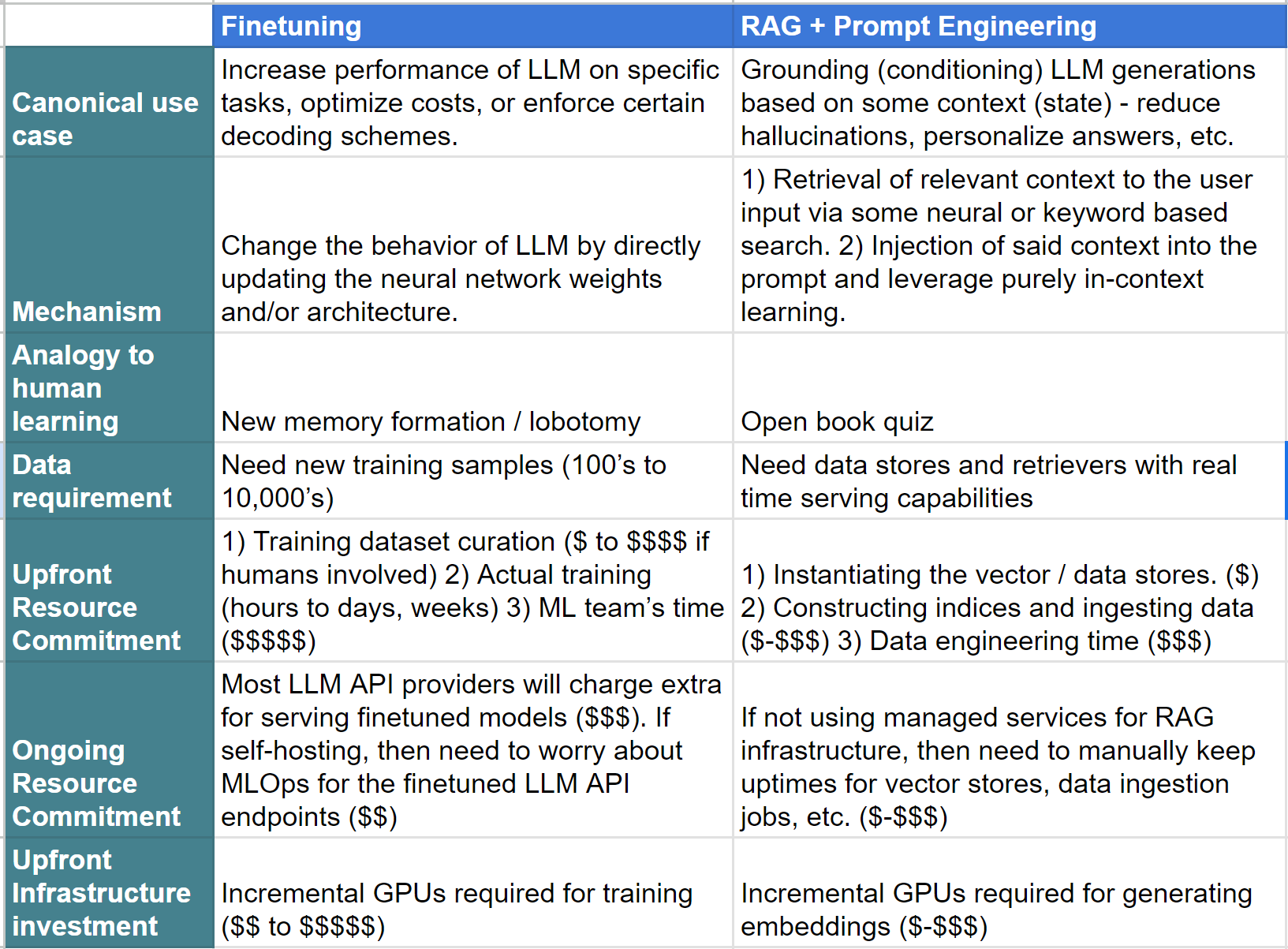

RAG vs. Fine-Tuning LLMs: What to Use When, and Why

RAG and fine-tuning both improve response generation for domain-specific queries, but they are fundamentally different techniques. Let's look at each. Retrieval-augmented generation (RAG) is a process in which large language models such as GPT-4o become context-aware by drawing on external data sources. According to a 2024 interview with Maxime Beauchemin, creator of Apache Airflow and Superset, RAG has proven effective in enabling AI-powered capabilities in business intelligence tools. Fine-tuning, on the other hand, shines in highly specialized tasks or when the goal is a smaller, more efficient model.

When deciding between RAG and fine-tuning, a crucial factor is the volume of domain- or task-specific labelled training data at our disposal. Fine-tuning an LLM for specific tasks or domains depends heavily on the quality and quantity of the labelled data available.

When it comes to enhancing the capabilities of large language models (LLMs), two powerful techniques stand out: RAG (retrieval-augmented generation) and fine-tuning. Both methods have their strengths and suit different use cases, and the right choice depends on your specific needs.
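Because fine-tuning quality tracks the quality and quantity of labelled data, a first practical step is to clean the labelled pairs and count what survives. The sketch below assumes a chat-style JSONL layout (one `{"messages": [...]}` object per line with `system`, `user`, and `assistant` roles), similar to what some hosted fine-tuning services such as OpenAI's accept; check your provider's documentation for its exact schema. The function name and the `min_examples` threshold are hypothetical illustrations, not vendor rules.

```python
import json


def to_chat_jsonl(pairs, system_prompt, min_examples=100):
    """Convert labelled (prompt, completion) pairs into chat-style JSONL lines.

    Empty and duplicate pairs are dropped, since label quality matters as much
    as quantity. min_examples is a hypothetical threshold for "enough data".
    Returns (lines, has_enough_examples).
    """
    seen = set()
    lines = []
    for prompt, completion in pairs:
        prompt, completion = prompt.strip(), completion.strip()
        # Skip pairs with a missing side, and exact duplicates.
        if not prompt or not completion or (prompt, completion) in seen:
            continue
        seen.add((prompt, completion))
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": prompt},
                {"role": "assistant", "content": completion},
            ]
        }
        lines.append(json.dumps(record))
    return lines, len(lines) >= min_examples


if __name__ == "__main__":
    labelled = [
        ("How do I reset my password?", "Use the 'Forgot password' link on the login page."),
        ("How do I reset my password?", "Use the 'Forgot password' link on the login page."),
        ("", "orphaned answer"),
    ]
    jsonl_lines, enough = to_chat_jsonl(labelled, "You are a support assistant.")
    print(len(jsonl_lines), enough)
```

The returned lines can be joined with `"\n"` and written to a `.jsonl` file. If the cleaned count falls well short of what your task needs, that is a signal to lean toward RAG instead.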

Both fine-tuning and RAG make general-purpose LLMs more useful, but they do it in different ways. A simple analogy: fine-tuning an LLM gives it a deeper understanding of a particular domain, such as medicine or education, while pairing the LLM with a RAG architecture gives it access to up-to-date, local data for its responses.

RAG is ideal when the domain knowledge is large, dynamic, or constantly updated (e.g., legal regulations, financial reports). Example: a legal assistant fetching the latest rulings or case law from a database. Fine-tuning is best for scenarios where the knowledge is stable and well defined (e.g., customer service scripts, FAQs).

While RAG supplies external, dynamic resources to an already trained model at query time, fine-tuning further trains the model on specialized datasets, altering its weights. Each approach suits different use cases. In this blog post, we explain each approach, compare the two, and recommend when to use them and which pitfalls to avoid. RAG excels when your application demands information from outside sources such as databases or documents: by design, RAG fetches this information to help the LLM generate its responses.
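The retrieve-then-generate flow described above can be sketched with the standard library alone. This is a minimal illustration, not a production design: a bag-of-words cosine similarity stands in for the embedding model and vector database a real RAG system would use, and the function names are hypothetical. The sketch stops at building the augmented prompt; sending it to an LLM is left out because that call depends on your provider.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Return up to k documents most similar to the query (zero-score ones dropped)."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def build_prompt(query, documents, k=2):
    """Augment the user's question with retrieved context for the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    knowledge_base = [
        "The 2024 ruling changed filing deadlines for appeals.",
        "Our cafeteria serves lunch at noon.",
        "Appeals must now be filed within 30 days under the new ruling.",
    ]
    print(build_prompt("What is the new deadline for filing appeals?", knowledge_base))
```

The design point this illustrates is that updating `knowledge_base` changes the model's answers immediately, with no retraining, which is why RAG suits the large, dynamic knowledge described above.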

Augmenting LLMs: Fine-Tuning or RAG?

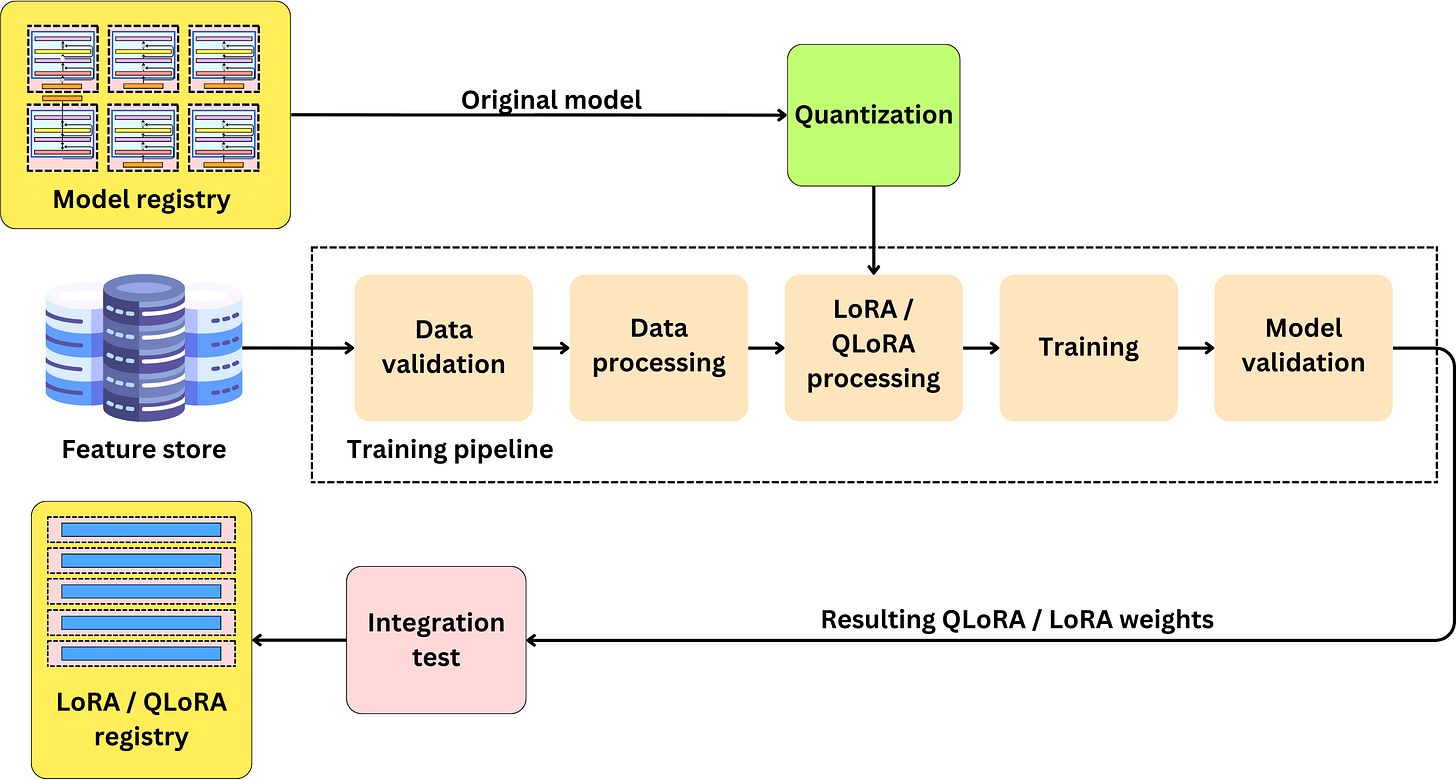

Why Fine-Tuning LLMs: Large Language Models (LLMs) Like BERT, GPT-3, GPT