The Future of AI and Its Impact on Human Interaction | Yobi

By synthesizing a range of theoretical frameworks and application domains, this thesis aims to advance the machine learning foundations of multisensory AI. In the first part, we present a theoretical framework that formalizes how modalities interact with each other to give rise to new information for a task. Emerging multisensory technologies from computer science, engineering, and human–computer interaction (HCI) enable new ways to stimulate, replicate, and control sensory signals such as touch, taste, and smell. They could therefore expand the possibilities for multisensory integration research.
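
The idea that modalities can "give rise to new information" can be made concrete with information theory. The sketch below is a minimal Python illustration, assuming the interaction is measured as interaction information, I(X1,X2;Y) - I(X1;Y) - I(X2;Y), on a hypothetical toy task where the label is the XOR of two binary modalities. It is not necessarily the framework the thesis proposes, and the helper names `entropy` and `mutual_information` are illustrative. In this toy task neither modality alone says anything about the label, yet together they determine it completely.

```python
from collections import Counter
from itertools import product
from math import log2


def entropy(counts):
    """Shannon entropy in bits of an empirical distribution stored in a Counter."""
    total = sum(counts.values())
    return -sum((c / total) * log2(c / total) for c in counts.values() if c)


def mutual_information(samples, xs_idx, y_idx):
    """I(X;Y) in bits, where X is the tuple of columns xs_idx and Y is column y_idx."""
    x = Counter(tuple(s[i] for i in xs_idx) for s in samples)
    y = Counter(s[y_idx] for s in samples)
    xy = Counter((tuple(s[i] for i in xs_idx), s[y_idx]) for s in samples)
    return entropy(x) + entropy(y) - entropy(xy)


# Hypothetical toy task: label y = x1 XOR x2, with every (x1, x2) pair equally likely.
samples = [(x1, x2, x1 ^ x2) for x1, x2 in product([0, 1], repeat=2)]

i1 = mutual_information(samples, [0], 2)      # information in modality 1 alone
i2 = mutual_information(samples, [1], 2)      # information in modality 2 alone
i12 = mutual_information(samples, [0, 1], 2)  # information in both modalities together
interaction = i12 - i1 - i2                   # > 0 means synergy outweighs redundancy
print(i1, i2, i12, interaction)
```

Running it reports zero bits for each modality alone and one bit for the pair, so the whole bit of task information emerges only from their interaction. If the two modalities instead duplicated each other (for example, y = x1 and x2 = x1), the same quantity would turn negative, signalling redundancy rather than synergy.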

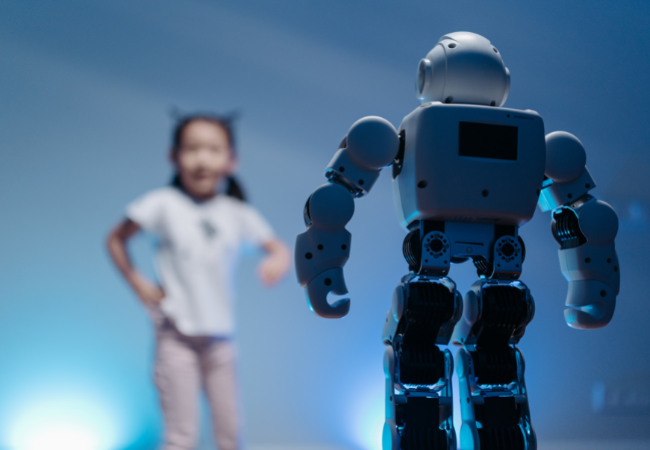

Multimodal AI promises to make human–machine interaction more intuitive, intelligent, and adaptable. One upcoming trend is multimodal AI in the metaverse, where AI is expected to enhance virtual reality (VR) and augmented reality (AR) experiences. More broadly, the field of artificial intelligence is evolving rapidly, with researchers constantly pushing the boundaries of what machines can perceive and understand, and multisensory technology is reshaping how we interact with the world.

The Multisensory Intelligence research group studies the foundations of multisensory artificial intelligence to create human–AI symbiosis across scales and sensory mediums. Imagine a world where emotional AI is not just a futuristic concept but a reality, transforming our daily interactions.

In virtual reality (VR), haptic technologies are unlocking new dimensions of sensory engagement, from emotional resonance to crossmodal integration with temperature, sound, and vision. Touch and temperature illustrate multisensory integration well, because the two are deeply intertwined in our perception of the world. For the first time, human-to-digital-human interaction has been mediated through hand-gesture input and mid-air haptic feedback, motivating further research into multimodal and multisensory location-based experiences that use these and related technologies.

The future of multisensory design lies in AI-driven adaptive experiences that respond to user emotions and behaviors. More fundamentally, the future of artificial intelligence demands a paradigm shift toward multisensory perception: systems that can digest ongoing multisensory observations, discover structure in unlabeled raw sensory data, and intelligently fuse useful information from different sensory modalities for decision making.
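
Fusing information from different sensory modalities can be sketched with the maximum-likelihood cue-combination model that is often used in multisensory integration research. The example below is a minimal Python sketch, assuming each modality yields an independent estimate of the same quantity corrupted by Gaussian noise of known variance. The function name `fuse_estimates` and the vision/touch numbers are hypothetical, and this is not presented as the method of any group mentioned above.

```python
def fuse_estimates(estimates, variances):
    """Combine independent Gaussian cues by inverse-variance (precision) weighting.

    Assumes each cue is an unbiased estimate of the same quantity with independent
    Gaussian noise. Returns (fused_estimate, fused_variance); the fused variance is
    never larger than the smallest input variance.
    """
    if not estimates or len(estimates) != len(variances):
        raise ValueError("need at least one cue and one variance per estimate")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    precisions = [1.0 / v for v in variances]  # reliability of each modality
    total = sum(precisions)
    fused = sum(p * x for p, x in zip(precisions, estimates)) / total
    return fused, 1.0 / total


# Hypothetical example: estimating an object's position (cm) from vision and touch.
vision_est, vision_var = 10.0, 1.0  # sharper cue
touch_est, touch_var = 13.0, 4.0    # noisier cue
pos, var = fuse_estimates([vision_est, touch_est], [vision_var, touch_var])
print(pos, var)
```

With these numbers the fused position lies closer to the sharper visual cue (about 10.6 cm), and the fused variance (0.8) is lower than that of either cue alone. Weighting by precision rather than averaging equally is what lets the more reliable sense dominate while still benefiting from the noisier one.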