Vision-Language Models for Vision Tasks: A Survey | Papers With Code

This paper provides a systematic review of vision-language models (VLMs) for various visual recognition tasks, including: (1) the background, which introduces the development of visual recognition paradigms; (2) the foundations of VLMs, which summarize the widely adopted network architectures, pre-training objectives, and downstream tasks; (3) the widely …
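One widely used family of VLM pre-training objectives is contrastive image-text alignment, popularized by CLIP. The sketch below is a minimal pure-Python illustration, assuming a dual-encoder setup in which row i of a batch of image embeddings is the matching pair of row i of the text embeddings. The function names and the default temperature of 0.07 are illustrative choices, not taken from the survey. It computes a symmetric InfoNCE loss: each image should score its own caption highest within the batch, and each caption should score its own image highest.

```python
import math
from typing import List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def logsumexp(xs: Sequence[float]) -> float:
    """Numerically stable log(sum(exp(x))) for a list of logits."""
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def contrastive_loss(image_embs: Sequence[Sequence[float]],
                     text_embs: Sequence[Sequence[float]],
                     temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss over N matched (image, text) pairs.

    Assumption (illustrative): image_embs[i] and text_embs[i] describe the
    same sample; every other pairing in the batch is treated as a negative.
    """
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j]: cosine similarity of image i and text j, divided by the temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Image-to-text: cross-entropy of each row against its diagonal entry
    i2t = sum(logsumexp(logits[i]) - logits[i][i] for i in range(n)) / n
    # Text-to-image: cross-entropy of each column against its diagonal entry
    t2i = sum(logsumexp([logits[i][j] for i in range(n)]) - logits[j][j]
              for j in range(n)) / n
    return (i2t + t2i) / 2


# Usage: correctly paired captions should give a lower loss than shuffled ones.
images = [[1.0, 0.0], [0.0, 1.0]]
captions = [[0.9, 0.1], [0.1, 0.9]]
print(contrastive_loss(images, captions) < contrastive_loss(images, captions[::-1]))  # True
```

Practical implementations compute the similarity matrix with a single matrix multiplication and, as in CLIP, often learn the temperature during training; the nested loops here trade speed for readability.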

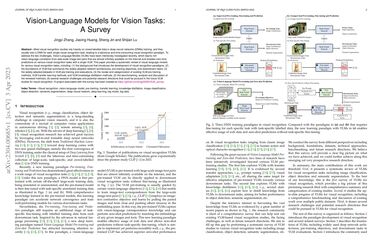

This is the repository for "Vision-Language Models for Vision Tasks: A Survey", a systematic survey of VLM studies across visual recognition tasks, including image classification, object detection, and semantic segmentation, among others.

Vision-language models (VLMs) integrate visual and textual information, enabling a wide range of applications such as image captioning and visual question answering, which makes them central to modern AI systems. However, their high computational demands pose challenges for real-time applications, which has led to a growing focus on developing efficient vision-language models. In this survey, we …

In this paper, we present a comprehensive overview of how vision-language tasks benefit from pre-trained models. First, we review several main challenges in vision-language tasks and discuss the limitations of the solutions that preceded the era of pre-training.
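Image classification with a VLM is often performed zero-shot: each class name is turned into a text prompt (for example "a photo of a cat"), embedded with the text encoder, and the image is assigned to the class whose text embedding is most similar to the image embedding. The sketch below assumes the embeddings have already been produced by some image and text encoder; the toy 3-dimensional vectors, the function names, and the temperature of 0.01 are hypothetical values chosen only for illustration.

```python
import math
from typing import Dict, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)


def zero_shot_classify(image_emb: Sequence[float],
                       class_text_embs: Dict[str, Sequence[float]],
                       temperature: float = 0.01) -> Dict[str, float]:
    """Softmax over image-text similarities, one score per class prompt.

    Assumption (hypothetical): class_text_embs maps each class name to the
    text-encoder embedding of a prompt such as "a photo of a {class}".
    """
    names = list(class_text_embs)
    scores = [cosine(image_emb, class_text_embs[c]) / temperature for c in names]
    m = max(scores)  # subtract the max logit for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return {c: e / total for c, e in zip(names, exps)}


# Usage with toy embeddings standing in for real encoder outputs (hypothetical values).
classes = {"cat": [0.9, 0.1, 0.0], "dog": [0.1, 0.9, 0.0], "car": [0.0, 0.1, 0.9]}
probs = zero_shot_classify([0.8, 0.2, 0.1], classes)
print(max(probs, key=probs.get))  # cat
```

Because the label set is just a dictionary of text embeddings, new classes can be added by embedding new prompts, with no retraining of the classifier.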

A Survey on Vision-Language-Action Models for Embodied AI | Papers With …

This line of work involves models that adapt pre-training to vision-and-language (V-L) learning and improve performance on downstream tasks such as visual question answering and visual captioning. To extend large language models (LLMs) beyond text, researchers have integrated visual capabilities with them, giving rise to vision-language models (VLMs). These models can tackle more complex tasks such as image captioning and visual question answering.