Why Parquet Files Crush CSV For Big Data Analytics

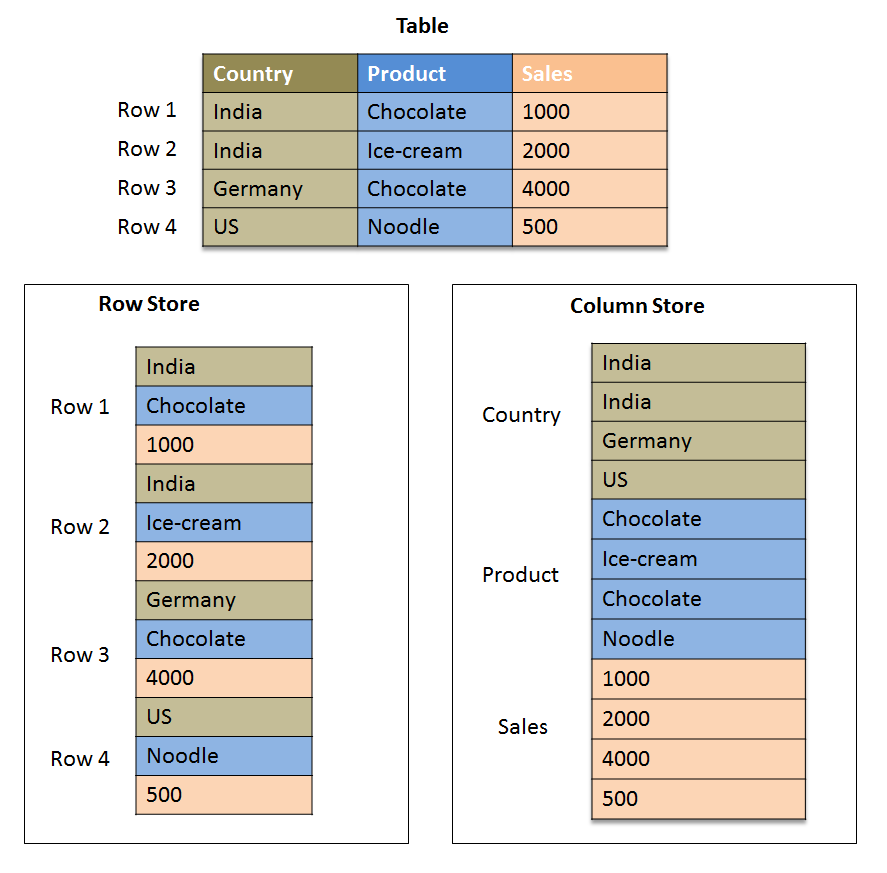

Apache Parquet is a modern, columnar file format that offers significant advantages over traditional text formats like CSV or TSV for large-scale data engineering and analytics. Below, we outline key benefits of Parquet in terms of structure, performance, compression, and compatibility, and contrast them with the drawbacks of row-based text files. CSV stores data row by row, so a query has to read every row in full, loading names and departments you don't need. Parquet stores data column by column, so a reader can fetch only the columns a query touches.
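To make the row-versus-column difference concrete, here is a minimal sketch in plain Python. It is not the Parquet format itself, only a toy model of the two layouts; the records, field names, and the "fields touched" counter are hypothetical and exist purely for illustration.

```python
# Toy illustration only: this models the layout idea, not the real Parquet format.
# The sample records below are made up for the example.
rows = [
    {"name": "Ana", "department": "Sales", "salary": 52000},
    {"name": "Ben", "department": "Ops", "salary": 48000},
    {"name": "Caro", "department": "Sales", "salary": 61000},
]


def total_salary_row_layout(records):
    """Row-oriented (CSV-like): each record carries all of its fields together."""
    fields_touched = 0
    total = 0
    for record in records:
        fields_touched += len(record)  # the whole row is read to reach one field
        total += record["salary"]
    return total, fields_touched


# Column-oriented (Parquet-like): all values of one column are stored together.
columns = {key: [record[key] for record in rows] for key in rows[0]}


def total_salary_column_layout(cols):
    """Only the 'salary' column is read; names and departments are never touched."""
    salary = cols["salary"]
    return sum(salary), len(salary)


row_total, row_touched = total_salary_row_layout(rows)
col_total, col_touched = total_salary_column_layout(columns)
print("row layout:   ", row_total, "fields touched:", row_touched)
print("column layout:", col_total, "fields touched:", col_touched)
```

Both layouts give the same total, but the column layout reads one third of the values here. With wide tables and real files on disk, that gap is what shows up as reduced I/O.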

Data analytics primarily uses two types of storage file formats: human-readable text files like CSV and performance-driven binary files like Parquet. This blog post compares these two formats in an ultimate showdown of performance and flexibility, where there can be only one winner. Whether you’re just starting out in data analytics or you’re a veteran data engineer, this guide will show you why Parquet files are a true game changer for your data storage needs.

Let’s talk about popular file formats like Parquet, Avro, JSON, CSV, and their fancy cousins, with real examples, actual use cases, and maybe a few jabs at CSV (because … why not?). First, why do file formats even matter? Think of it like this: your data warehouse is a fridge.

Parquet is a columnar storage format. Instead of storing data row by row like CSV or JSON, it stores data column by column. This structural difference has a significant impact on how efficiently data can be read, processed, and compressed, especially at scale.

Today, I will demystify Parquet files and explain why a growing number of data scientists prefer Parquet files to CSV files. Let’s start with an example.

Parquet stores data in columns, unlike row-based formats like CSV or JSON. This design reduces disk I/O for analytical queries. It also supports advanced compression and encoding schemes, with codecs including Snappy, Gzip, Brotli, and LZO. Parquet is self-describing: it stores metadata and schema in addition to the data.

Parquet is an open-source, columnar storage file format optimized for use with big data processing frameworks like Apache Spark, Hadoop, and AWS Athena. Unlike row-based formats (e.g., CSV, JSON), Parquet stores data by columns, which offers significant performance benefits for analytical queries. In summary, Parquet is generally preferred when dealing with large datasets, analytical workloads, and complex data types, as it offers improved storage efficiency and query performance.
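The encoding schemes mentioned above work well on columns because neighboring values share a type and often repeat. The sketch below shows simplified versions of two such ideas, dictionary encoding and run-length encoding, in plain Python. It is an illustration under assumptions, not Parquet's actual on-disk encoding: the function names and the sample `department` column are hypothetical, and real files additionally pass encoded data through a codec such as Snappy or Gzip.

```python
# Simplified sketch of two column-encoding ideas; not Parquet's real byte layout.
def dictionary_encode(values):
    """Replace each value with a small integer code plus a lookup table."""
    dictionary = []  # code -> original value
    code_of = {}     # original value -> code
    codes = []
    for value in values:
        if value not in code_of:
            code_of[value] = len(dictionary)
            dictionary.append(value)
        codes.append(code_of[value])
    return dictionary, codes


def run_length_encode(codes):
    """Collapse consecutive repeats into (code, run_length) pairs."""
    runs = []
    for code in codes:
        if runs and runs[-1][0] == code:
            runs[-1] = (code, runs[-1][1] + 1)
        else:
            runs.append((code, 1))
    return runs


def decode(dictionary, runs):
    """Invert both steps to recover the original column."""
    return [dictionary[code] for code, length in runs for _ in range(length)]


# Hypothetical column of repeated string values.
department = ["Sales", "Sales", "Sales", "Ops", "Ops", "Sales"]
dictionary, codes = dictionary_encode(department)
runs = run_length_encode(codes)

print(dictionary)  # ['Sales', 'Ops']
print(codes)       # [0, 0, 0, 1, 1, 0]
print(runs)        # [(0, 3), (1, 2), (0, 1)]
assert decode(dictionary, runs) == department
```

In a row-based file the same department strings are interleaved with names and salaries, so runs like these rarely form. That is one reason columnar files tend to compress better.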


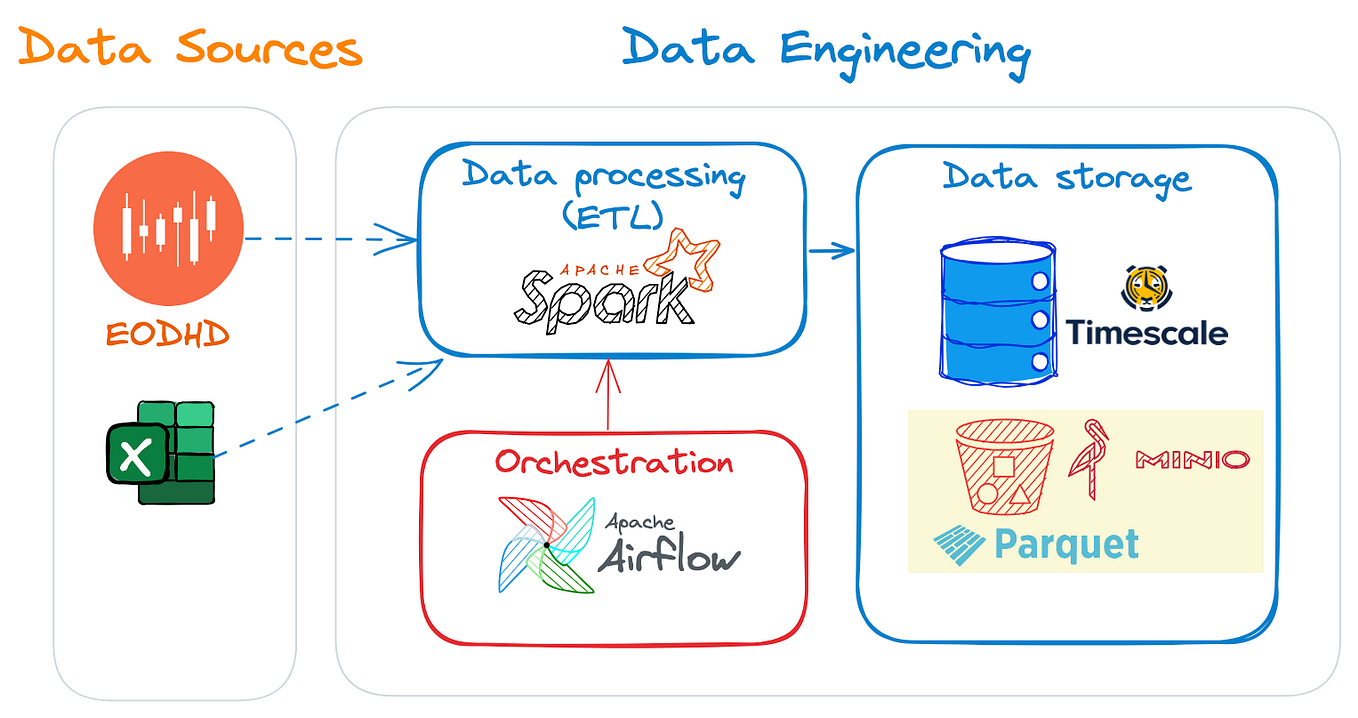

